How to Better Structure AWS S3 Security

If the new IT intern suggests that you install a publicly accessible web server on your core file server – you might suggest that they be fired.

If they give up on that, but instead decide to dump the reports issuing from your highly sensitive data warehouse jobs to your webserver – they’d definitely be fired.

But things aren’t always so clear in the brave new world of the cloud – where services like Amazon’s Simple Storage Service (S3), which performs multiple, often overlapping roles in an application stack, is always one click away from exposing your sensitive files online.

Cloud storage services are now more than merely “a place to keep a file” – they often serve as both inputs and outputs to more elaborate chains of processes. The end result of all of this is the recent spate of high profile data breaches that have stemmed from S3 buckets.

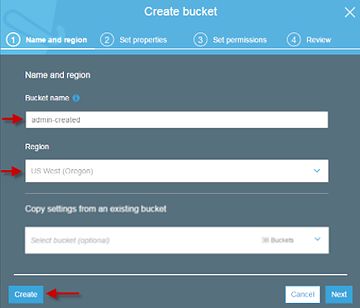

Buckets are the top level organizational resource within S3 and are always assigned a DNS addressable name. Ex: http://MyCompanyBucket.s3.amazonaws.com

This might trick you into thinking of a bucket like a server, where you might create multiple hierarchies within a shared folder for each group that needs access within your organization.

Here’s the thing:

•There’s no cost difference between creating 1 bucket and a dozen

•By default you’re limited to a 100 buckets, but getting more is as simple as making a support request.

•There is no performance difference between accessing a 100 files on one bucket or 1 file in a 100 different buckets.

With these facts in mind, we need to steal a concept from computer science class: the Single Responsibility Principle.

Sidebar: A warning sign is often found in the bucket naming. Generic, general names like: ‘mycompany’ or ‘data-store’ are asking for trouble. Ideally you should establish a naming convention like: companyname-production/staging/development-applicationname

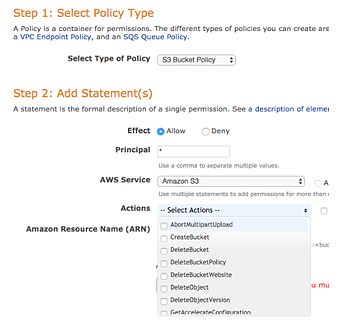

They define:

•Who can access a bucket (what users/principals)

•How they can access it (http only, using MFA)

•Where they can access it from (a Virtual Private Cloud, specific IP)

Benefit #1 of organizing your buckets into narrowly defined roles: your bucket policies will be an order of magnitude simpler, since you won’t have to try to puzzle out conflicting policy statements or even just read through (up to 20kb!) of JSON to try and reason out the implications of a change.

Example Bucket Policy

1

2

3{

4 “Version”: “2012-10-17”,

5 “Id”: “S3PolicyId1”,

6 “Statement”: [

7 {

8 “Sid”: “IPAllow”,

9 “Effect”: “Allow”,

10 “Principal”: “*”,

11 “Action”: “s3:*”,

12 “Resource”: “arn:aws:s3:::examplebucket/*”,

13 “Condition”: {

14 “IpAddress”: {“aws:SourceIp”: “54.240.143.0/24”},

15 “NotIpAddress”: {“aws:SourceIp”: “54.240.143.188/32”}

16 }

17 }

18 ]

19}

20

21

Narrow buckets mean simpler policies, which in turn mean less likelihood of accidentally over permissioning users – and unintentionally creating a data breach.

Think of Bucket Policies as how the data should be treated.

IAM Policies in S3

Reviewing access periodically can give you great insight into if your data is being accessed from an unknown location, or in the case of a data breach, how and when exfiltration occurred.

More recently, AWS Athena was launched. It’s a new service that lets you directly run SQL queries against structured data sources like JSON, CSV and log files stored in S3.